Image Source - Nano Banana

My Sunday afternoon scroll through tech news led me to this article: "Taco Bell rethinks AI Drive Through after man orders 18,000 cups of water." My first reaction was to the absurdity of the number. 18,000 cups? No one could possibly have that much patience, because I certainly don't. But this incident made me wonder: are we already experiencing AI fatigue?

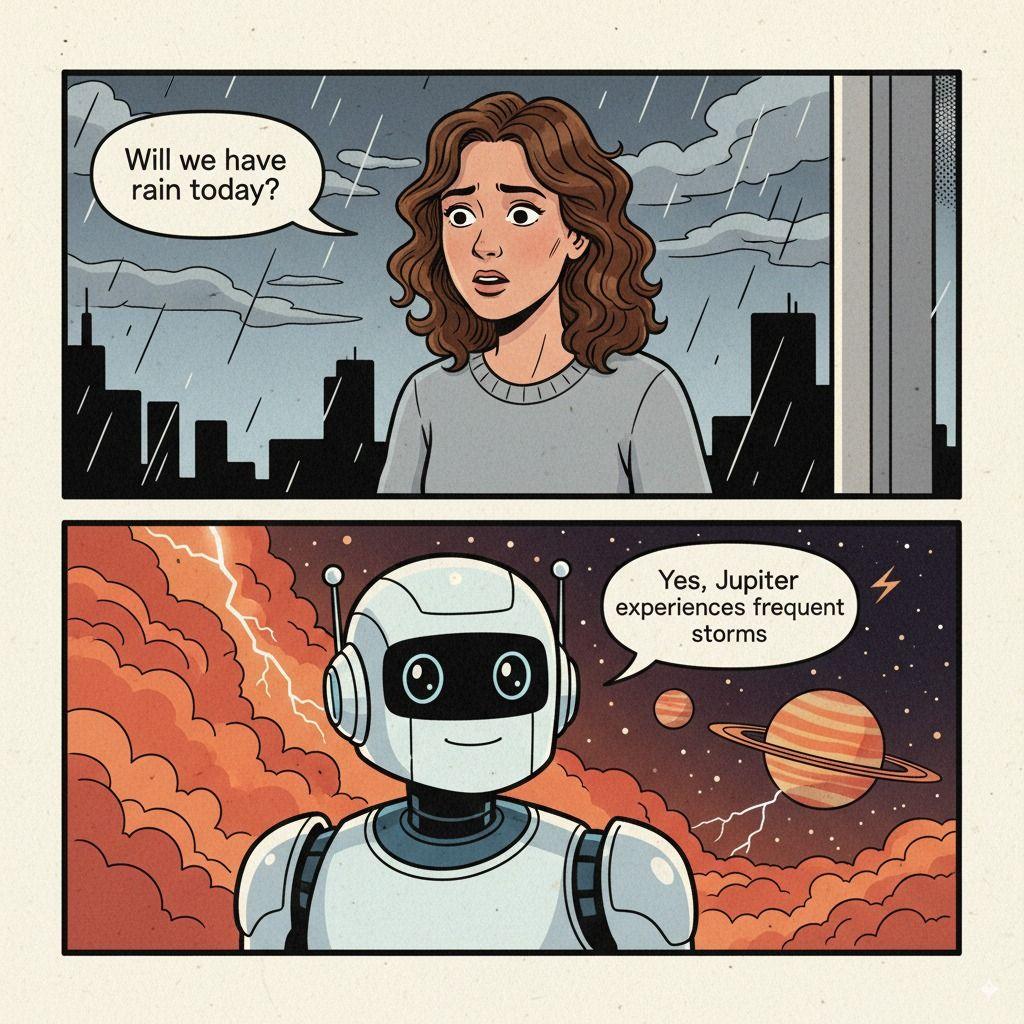

The image of someone gaming a drive-through AI system just to talk to a human felt like a perfect metaphor for where we are in 2025. Not the triumphant AI future we were promised, but something more complex.

A couple of weeks ago, MIT released a report claiming that 95% of generative AI projects are failing. LinkedIn gurus and media commentators quickly jumped on this, with opinions ranging from "AI is snake oil" to "MIT has lost all credibility." But amid this noise, the real message was overlooked.

I believe what the report actually reveals isn't that AI is ineffective, but that we're addressing the wrong problems and implementing AI where it simply doesn't belong.

Take Taco Bell as a perfect example. Drive-through ordering functioned decently well before. Was an AI "solution" the right solution?

The 95% failure rate becomes entirely logical when you consider that companies are attempting to automate interactions that customers genuinely value, or trying to solve non-existent problems.

The Copycat Trap: Popular ≠ Applicable

The dangerous pattern playing out across the industry is simple:

See success → Copy workflow → Miss context entirely.

Companies observed ChatGPT's massive adoption and hastily concluded they needed chatbots everywhere. They watched AI image generation go viral and shoehorned AI into design workflows where it created more friction than value.

The MCP (Model Context Protocol) server trend perfectly illustrates this trap. Companies rushed to release MCP servers to ride the AI hype wave, but end users aren't adopting them because they don't solve real pain points. It's classic "solution looking for a problem" syndrome—like building mobile apps that merely replicate desktop functions without considering whether users actually need a mobile interface.

We're still early in the AI adoption curve. Effective use cases are being discovered through experimentation, not through corporate strategy sessions. What works brilliantly in one context often fails completely in another.

A clear fatigue cycle is unfolding before our eyes.

The novelty phase ("Wow, AI can take my order!") quickly turns to frustration ("Why is this harder than talking to a person?"), then active resistance ("How do I bypass this system?"), and finally rejection ("I actively avoid AI options when available").

When people order absurd quantities to overwhelm the AI and force human connection, they're providing valuable feedback about the mismatch between company intentions and customer preferences.

From my experience, successful AI adoption is deeply personal and contextual today. Each person's relationship with AI is unique. I might use AI for writing help, you for coding assistance, someone else for image editing. No two workflows are identical, yet we keep trying to force one-size-fits-all experiences onto diverse use cases.

Listening to Resistance

The path to effective AI integration perhaps lies at the intersection of technology and human experience. When we observe the most effective AI systems in action, certain patterns emerge:

They acknowledge human agency by preserving choice. They address genuine friction points rather than manufactured ones. They empower users to determine their level of engagement. And perhaps most importantly, they often operate behind the scenes, enhancing experiences without demanding attention.

As we continue to explore this landscape, returning to first principles will help us discern where AI creates genuine value versus where it merely adds complexity.

Every technological revolution faces this recalibration moment when initial hype encounters practical reality. The dot-com bubble burst refined the internet. Early smartphone limitations shaped mobile computing. Enterprise resistance transformed cloud adoption. Now AI faces its "water cup moment."

And perhaps that's exactly how it should be.

What has been your experience with AI integrations? Have you encountered situations where AI creates more friction than it solves? Or have you found yourself trying to bypass an AI system to reach a human? We would love to hear your stories. Reach out to us at plainsight@wyzr.in.

Thanks for reading this edition of Plain Sight! If you found it valuable, please share it with friends who might enjoy it.

What we are reading this week

“With more data, we’ll have more correlation, but not more causation.”

As someone deeply involved with data, Nate Silver is one of my favorite authors. His book The Signal and the Noise explores deep questions about data and its utility. Though the book is more than a decade old, Silver's ideas and frameworks remain timeless.

Until next time.

Best,

Amlan